Apache Airflow helps data engineers create, schedule, and monitor workflows. Whether it’s ETL processes, machine learning pipelines, or batch jobs, Airflow provides essential tools and flexibility. This article explores its features, architecture, use cases, and best practices.

Key Takeaways

Apache Airflow is a powerful platform for designing, scheduling, and monitoring complex data workflows, integrating seamlessly with disparate systems through Python code.

Key features of Apache Airflow include its Directed Acyclic Graphs (DAGs) for defining data pipelines, sophisticated task scheduling and dependency management, and comprehensive monitoring and alerting capabilities.

Despite its many strengths, such as high versatility and robust architecture, Apache Airflow faces challenges like handling streaming data, usability for non-developers, and a lack of native support for pipeline versioning.

Introduction

Envision a robust platform that empowers data engineers to author, schedule, and monitor workflows with finesse and flexibility: this is the essence of Apache Airflow. Created at Airbnb in 2014 and publicly announced in 2015, Airflow later became a top-level Apache Software Foundation project and has drawn a large open-source community of contributors who continue to improve it.

With a design built around declaring dependencies between tasks, Airflow provides a rich user interface that visualizes data pipelines and shows the status of every run and task. By resolving those dependencies itself, Airflow lets data engineers concentrate on what each task does rather than on the order in which tasks run.

Understanding Apache Airflow

Apache Airflow is an open-source project of the Apache Software Foundation in which complex data workflows are defined in Python code. At its core, Airflow is a platform for data pipeline orchestration, enabling the integration of disparate systems into cohesive and manageable workflows.

Its growing community has turned it into more than just a tool; it’s an ecosystem vibrant with collaboration and innovation, built around Airflow’s components and architecture.

Key Features of Apache Airflow

Standing at the helm of workflow orchestration, Apache Airflow boasts a suite of features that cater to the diverse and complex needs of modern data pipelines. It’s the flexibility of Python that allows users to programmatically author and schedule tasks, while a plugin architecture and Jinja templating make Airflow a dynamic and versatile platform.

As we delve deeper, we uncover the building blocks of its power: Directed Acyclic Graphs (DAGs), task scheduling, and a comprehensive monitoring system.

Directed Acyclic Graphs (DAGs)

Directed Acyclic Graphs (DAGs) are the backbone of Airflow’s workflow orchestration, serving as a robust framework for defining data pipelines. In a DAG, each task is a node and each dependency is a directed edge, and because the graph contains no cycles, there is always a valid order in which to run the tasks. Through DAGs, users can articulate the data flows and dependencies that dictate the execution of different tasks within a workflow, offering a transparent and controlled way to manage complex business logic.

The freedom to configure pipelines dynamically and to manage an arbitrary number of tasks makes DAGs the central building block of any Airflow deployment.
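
The idea of a DAG can be sketched without Airflow at all. The example below is a minimal illustration that uses only the Python standard library; the `Task` class and the task names are hypothetical stand-ins, and only the `>>` notation is borrowed from the way Airflow code declares that one task runs before another.

```python
from graphlib import TopologicalSorter


class Task:
    """Toy stand-in for an Airflow task; it only records its upstream tasks."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()

    def __rshift__(self, other):
        # `a >> b` means "b runs after a", mirroring Airflow's dependency syntax.
        other.upstream.add(self.task_id)
        return other


def execution_order(tasks):
    """Return task ids in an order that respects every declared dependency."""
    graph = {task.task_id: task.upstream for task in tasks}
    return list(TopologicalSorter(graph).static_order())


extract = Task("extract")
transform = Task("transform")
load = Task("load")
extract >> transform >> load

order = execution_order([extract, transform, load])
print(order)  # ['extract', 'transform', 'load']
```

Because the graph has no cycles, a topological sort always succeeds, which is what makes a valid run order possible.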

Task Scheduling and Dependency Management

The heart of Airflow lies in its ability to author, schedule, and monitor the tasks that make up a data workflow. By default, a task starts only after all of its upstream tasks have completed successfully, which preserves the integrity of the workflow.

From managing workflows of diverse complexities to triggering tasks at precisely the right moment, Airflow’s scheduling mechanisms are fine-tuned to handle an array of scenarios, making it an indispensable tool for data engineers and scientists alike.
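
To make the upstream and downstream rule concrete, here is a small standard-library sketch of a runner that skips any task whose upstream did not succeed. It is an illustration rather than Airflow's scheduler: the function name `run_workflow` and the sample tasks are hypothetical, while the state name `upstream_failed` follows the label Airflow uses for this situation.

```python
from graphlib import TopologicalSorter


def run_workflow(dependencies, callables):
    """Run tasks in dependency order and return the final state of each task.

    dependencies maps a task id to the set of its upstream task ids.
    callables maps a task id to a zero-argument function that does the work.
    """
    states = {}
    for task_id in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(task_id, set())
        if any(states[u] != "success" for u in upstream):
            # Do not run the task: something it depends on did not succeed.
            states[task_id] = "upstream_failed"
            continue
        try:
            callables[task_id]()
            states[task_id] = "success"
        except Exception:
            states[task_id] = "failed"
    return states


def broken_transform():
    raise ValueError("bad input row")


deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
work = {"extract": lambda: None, "transform": broken_transform, "load": lambda: None}
states = run_workflow(deps, work)
print(states)
```

Here `extract` succeeds, `transform` fails, and `load` is never executed, so partial or corrupt results do not flow further down the pipeline.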

Monitoring and Alerts

The transparency and control that Airflow provides extend to its monitoring capabilities, where every task’s progress can be meticulously tracked. It’s through Airflow’s web interface that users can delve into granular logging, ensuring that any issues are swiftly identified and addressed. Moreover, the integration with messaging services like Slack and email ensures that alerts are communicated in real-time, allowing teams to react promptly to any anomalies.

These alerting mechanisms, combined with the ability to define completion time limits for tasks, establish Airflow as a vigilant guardian of data pipelines.
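
The alerting pattern can be sketched as a callback that fires when a task fails. Airflow exposes a similar hook through the `on_failure_callback` task parameter; the code below does not use Airflow and is only a standard-library illustration. The names `run_with_alert` and `load_orders` are hypothetical, and the `notify` argument stands in for whatever would post to Slack or send an email.

```python
def run_with_alert(task_id, func, notify):
    """Run func and call notify(message) if it raises an exception."""
    try:
        func()
    except Exception as exc:
        notify(f"Task {task_id} failed: {exc}")
        return "failed"
    return "success"


def failing_task():
    raise RuntimeError("connection refused")


sent_messages = []  # a list's append method stands in for a Slack or email client
state = run_with_alert("load_orders", failing_task, sent_messages.append)
print(state, sent_messages)
```

Keeping the notifier as a plain callable makes it easy to swap the channel without touching the task logic.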

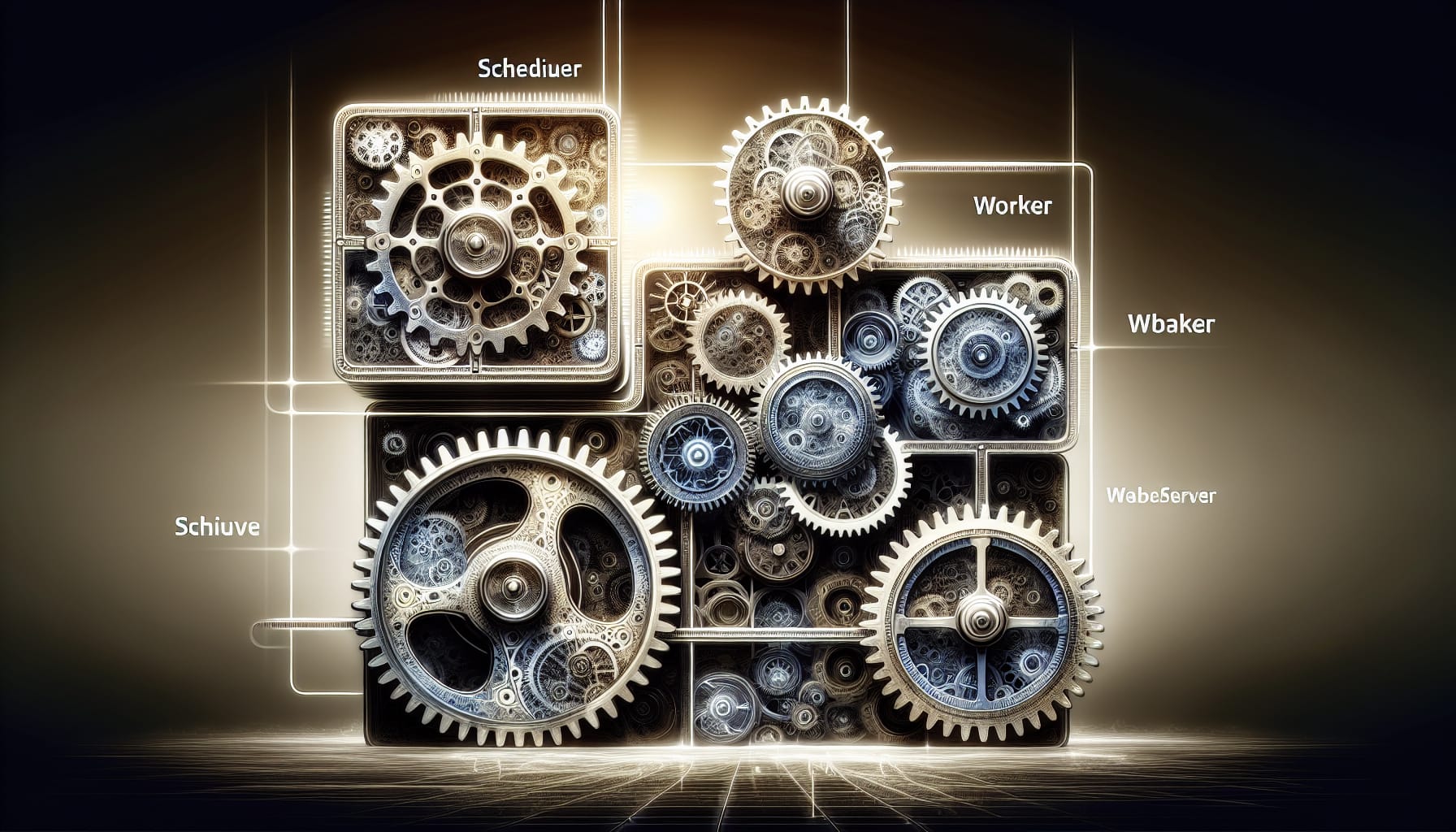

Apache Airflow Architecture

The architectural prowess of Apache Airflow is a marvel of modular design, where each component plays a pivotal role in orchestrating workflows. The scheduler, the maestro of timing, ensures that tasks are triggered and executed according to plan, while the workers, the diligent performers, carry out the tasks with precision.

The webserver acts as the stage, providing a user interface for overseeing the performance, and the metadata database, the repository of history, captures the state of each act. It’s this harmony between components that enables Airflow to manage complex data pipelines with grace and efficiency.
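
How these components cooperate can be illustrated with a toy model. The sketch below is not Airflow's implementation: it assumes an in-memory SQLite table as the metadata database, a `deque` as the queue between scheduler and workers, and hypothetical task names. A web interface would simply read the same table to display state.

```python
import sqlite3
from collections import deque

# Metadata database: stores the state of every task instance.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE task_instance (task_id TEXT PRIMARY KEY, state TEXT)")

DEPENDENCIES = {"extract": [], "transform": ["extract"], "load": ["transform"]}
db.executemany(
    "INSERT INTO task_instance VALUES (?, 'none')",
    [(task_id,) for task_id in DEPENDENCIES],
)

queue = deque()  # stands in for the queue between the scheduler and the workers


def state_of(task_id):
    row = db.execute(
        "SELECT state FROM task_instance WHERE task_id = ?", (task_id,)
    ).fetchone()
    return row[0]


def set_state(task_id, state):
    db.execute(
        "UPDATE task_instance SET state = ? WHERE task_id = ?", (state, task_id)
    )


def scheduler_pass():
    """Queue every unscheduled task whose upstream tasks have all succeeded."""
    for task_id, upstream in DEPENDENCIES.items():
        ready = all(state_of(u) == "success" for u in upstream)
        if state_of(task_id) == "none" and ready:
            set_state(task_id, "queued")
            queue.append(task_id)


def worker_pass():
    """Execute queued tasks and record the outcome in the metadata database."""
    while queue:
        task_id = queue.popleft()
        set_state(task_id, "running")
        # The real work of the task would happen here.
        set_state(task_id, "success")


passes = 0
while any(state_of(task_id) != "success" for task_id in DEPENDENCIES):
    scheduler_pass()
    worker_pass()
    passes += 1

final_states = {task_id: state_of(task_id) for task_id in DEPENDENCIES}
print(passes, final_states)
```

The scheduler and the worker never call each other directly; they coordinate only through the queue and the shared state table, which is the essence of the modular design described above.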

Common Use Cases for Apache Airflow

The versatility of Apache Airflow is best exemplified through its myriad of use cases, each showcasing its ability to tackle complex workflows with finesse. From orchestrating ETL processes to training machine learning models and managing batch processing jobs, Airflow serves as the linchpin that ensures data is not just processed, but harnessed to its full potential.

Let us explore how these common scenarios leverage the strengths of Airflow to achieve data engineering success.

Data Pipelines for ETL Processes

Data engineers and analysts often turn to Airflow for its capability to manage and monitor the ETL pipelines critical to data warehousing. By dynamically generating and instantiating pipelines, Airflow facilitates the movement of data from multiple sources, through various transformations, and into the data warehouse, ensuring that data practitioners can rely on accurate and up-to-date data sets for their analyses.

The platform’s user interface allows not just for visualization but for real-time interaction, making it an invaluable asset in the world of big data systems.
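
A minimal extract, transform, and load sequence might look like the following sketch. It uses only the standard library, with an inline CSV string as the source and an in-memory SQLite database standing in for the warehouse; the data, table name, and function names are all hypothetical. In Airflow, each function would typically become its own task.

```python
import csv
import io
import sqlite3

RAW_CSV = "order_id,amount\n1,19.99\n2,5.00\n3,not_a_number\n"


def extract(raw_text):
    """Parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Keep rows with a numeric amount and convert it to integer cents."""
    cleaned = []
    for row in rows:
        try:
            cents = round(float(row["amount"]) * 100)
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        cleaned.append((int(row["order_id"]), cents))
    return cleaned


def load(rows, connection):
    """Write rows to the target table; safe to repeat for the same input."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER)"
    )
    connection.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?)", rows)
    connection.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), warehouse)
summary = warehouse.execute(
    "SELECT COUNT(*), SUM(amount_cents) FROM orders"
).fetchone()
print(summary)  # (2, 2499)
```

Writing the load step with `INSERT OR REPLACE` keeps it idempotent, so a retried task does not duplicate rows.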

Machine Learning Model Training

In the domain of data science, Airflow proves its mettle by automating the lifecycle of machine learning models. From pre-processing data to training and evaluating models, and eventually deploying them, Airflow streamlines the entire process, enabling data scientists to focus on developing more accurate and impactful models.

The precision and efficiency afforded by well-orchestrated data pipelines in Airflow not only accelerate the model development cycle but also promote the consistent reproducibility of results.

Batch Processing Jobs

Airflow’s scheduling prowess comes into play when managing batch processing jobs, where large volumes of data need to be processed at specific intervals. Its robust monitoring features ensure that tasks are executed reliably and on schedule, enabling businesses to handle their data processing needs with confidence.

Whether it’s running Spark jobs or executing Python scripts, Airflow provides the framework for seamless and timely execution of these critical operations.
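
Batch jobs are usually tied to a time window. The sketch below shows one way to split a date range into daily intervals, each of which one run would process; Airflow uses a comparable notion that it calls a data interval. The helper name `daily_intervals` and the dates are hypothetical.

```python
from datetime import date, timedelta


def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs covering [start, end) by day."""
    current = start
    while current < end:
        next_day = current + timedelta(days=1)
        yield current, next_day
        current = next_day


intervals = list(daily_intervals(date(2024, 1, 30), date(2024, 2, 2)))
for interval_start, interval_end in intervals:
    print(f"process records from {interval_start} up to {interval_end}")
```

Making each run responsible for exactly one half-open interval means a missed day can be reprocessed later without overlapping its neighbors.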

Best Practices for Using Apache Airflow

To harness the full power of Apache Airflow, it is essential to adhere to a set of best practices that optimize the platform’s efficiency and maintainability. Whether it’s through modular code design, version control, or the setting of Service Level Agreements (SLAs), these tried and true methods ensure that workflows are not only effective but also resilient to change.

Let’s delve into the nuances of these practices and how they contribute to the success of Airflow projects.

Modular Code Design

The philosophy of modular code design in Airflow is akin to building with Legos—each block should be independent, reusable, and easy to test. By writing code in a modular fashion, data engineers can create robust pipelines that are easily scalable and maintainable, even as the complexity of business logic evolves.

This approach not only simplifies the development process but also enhances collaboration among team members, as each module can be developed and debugged in isolation.
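
As a small illustration of modular design, the business logic below lives in plain functions with no orchestration code and no I/O, so each piece can be tested on its own. The function names and sample data are hypothetical; a task would only need to call `clean_emails`.

```python
def normalize_email(raw):
    """Pure helper: trims whitespace and lowercases a single address."""
    return raw.strip().lower()


def deduplicate(items):
    """Return items in first-seen order without duplicates."""
    seen = set()
    unique = []
    for item in items:
        if item not in seen:
            seen.add(item)
            unique.append(item)
    return unique


def clean_emails(raw_emails):
    """The function a task would call; it only composes the helpers above."""
    return deduplicate(normalize_email(email) for email in raw_emails)


cleaned = clean_emails([" Ada@Example.com", "ada@example.com ", "bob@example.com"])
print(cleaned)  # ['ada@example.com', 'bob@example.com']
```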

Version Control for Workflows

In the fast-paced world of data engineering, the ability to track and manage changes in workflows is crucial, and this is where version control for Airflow pipelines shines. By syncing workflows with a Git repository, teams can maintain a clear history of changes, facilitating collaboration and enabling rollback functionalities when needed.

This level of control is indispensable for ensuring consistency in workflow execution and allows for the agile adaptation to new requirements or changes in data sources.

Setting Service Level Agreements (SLAs)

Service Level Agreements (SLAs) in Airflow act as a commitment to performance, dictating the expected completion times for tasks and workflows. By tracking these metrics, teams can proactively identify bottlenecks and optimize their pipelines for efficiency.

When SLAs are breached, notifications are promptly sent out, ensuring that any delays are addressed swiftly and maintaining the overall reliability of the data processing environment.
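
The check behind an SLA can be sketched in a few lines. The example below is a simplification that does not use Airflow: it assumes the SLA is measured from the scheduled start of the run, and the function name `find_sla_misses`, the task names, and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def find_sla_misses(scheduled_at, finished_at, sla):
    """Return the sorted ids of tasks that finished after scheduled_at + sla."""
    deadline = scheduled_at + sla
    return sorted(
        task_id for task_id, done in finished_at.items() if done > deadline
    )


scheduled_at = datetime(2024, 1, 1, 0, 0)
finished_at = {
    "extract": datetime(2024, 1, 1, 0, 20),
    "load": datetime(2024, 1, 1, 1, 15),
}
misses = find_sla_misses(scheduled_at, finished_at, sla=timedelta(hours=1))
for task_id in misses:
    print(f"SLA missed by task {task_id}")
```

In this run only `load` crosses the one-hour deadline, so it is the only task that would trigger a notification.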

Integrating Apache Airflow with Other Tools

The true power of Apache Airflow is magnified when it is seamlessly integrated with a plethora of other tools and platforms, thus expanding its capabilities to meet the diverse needs of data teams. Whether it is connecting to third-party systems, leveraging big data platforms, or integrating with cloud services, Airflow’s extensible design makes it a central hub for data workflows.

These integrations not only enhance the platform’s functionality but also provide a unified interface for managing complex data pipelines across various environments.

Integration with Cloud Services

Airflow’s extensive support for cloud services such as AWS, Google Cloud, and Azure epitomizes its adaptability to different infrastructures. By leveraging hooks and operators designed for these platforms, users can integrate Airflow with cloud-based data lakes, storage solutions, and analytics services, enabling a seamless data flow across cloud ecosystems. This integration not only simplifies the management of complex workflows but also opens up new possibilities for scalable and resilient data processing in the cloud.

Using Airflow with GitHub

Pairing Airflow with GitHub brings version control and automated deployment to workflow management. Storing DAGs in a GitHub repository allows for a collaborative approach to workflow development, where changes can be tracked, reviewed, and deployed with precision.

GitHub Actions further extend this capability by enabling continuous integration and delivery, ensuring that Airflow pipelines are always in sync with the latest code changes and tested for reliability.
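
One check a CI job could run on every pull request is that the declared dependencies still form a valid DAG. The sketch below is a standard-library illustration of such a check, not an Airflow or GitHub Actions API; the function name `validate_dependencies` and the sample graphs are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def validate_dependencies(dependencies):
    """Return a list of problems; an empty list means the graph is a valid DAG.

    dependencies maps a task id to the set of its upstream task ids.
    """
    problems = []
    known = set(dependencies)
    for task_id, upstream in dependencies.items():
        for upstream_id in sorted(upstream):
            if upstream_id not in known:
                problems.append(f"{task_id} depends on unknown task {upstream_id}")
    try:
        TopologicalSorter(dependencies).prepare()
    except CycleError as exc:
        # The second argument of CycleError holds the nodes that form the cycle.
        problems.append("cycle detected: " + " -> ".join(map(str, exc.args[1])))
    return problems


valid = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
cyclic = {"a": {"b"}, "b": {"a"}}
print(validate_dependencies(valid))
print(validate_dependencies(cyclic))
```

A test runner such as pytest could assert that the returned list is empty and fail the build otherwise.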

Connecting to Databases and Data Warehouses

The connectivity prowess of Airflow is showcased through its ability to interface with a wide array of databases and data warehousing platforms. With hooks that cater to different database technologies, Airflow simplifies the extraction and transformation of data, forming a bridge between operational databases and analytical data warehouses like Snowflake and Redshift.

This integration is particularly beneficial for data engineers and analysts who rely on timely and accurate data for informed decision-making.

Advanced Features of Apache Airflow

Beyond its core functionalities, Apache Airflow offers a spectrum of advanced features that cater to the evolving needs of modern data pipelines. From the dynamic generation of tasks to the incorporation of event-driven workflows and the flexibility of REST APIs, these features not only enhance the platform’s versatility but also empower users to create custom solutions tailored to their specific use cases.

Dynamic Task Mapping

Dynamic task mapping in Apache Airflow enables a level of adaptability that is crucial for handling unpredictable data volumes and structures. By allowing tasks to be generated and adjusted at runtime based on external parameters or datasets, Airflow facilitates a responsive and efficient data processing strategy.

This capability of dynamic pipeline generation is particularly beneficial when the number of tasks is not known ahead of time, ensuring that pipelines can dynamically scale to meet the demands of the data.
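
The map-then-reduce shape of dynamic task mapping can be shown in plain Python. Airflow expresses it by calling `expand()` on a task; the sketch below does not use Airflow, and the file names, contents, and function names are hypothetical. The point is that the number of mapped calls depends on what the upstream step returns at run time.

```python
# Hypothetical inputs; in practice this listing is only known at run time.
FILES = {"a.csv": "x\n1\n2\n", "b.csv": "x\n3\n"}


def list_files():
    """Upstream step: discover the work items."""
    return sorted(FILES)


def count_rows(name):
    """Mapped step: runs once per file and returns its data row count."""
    return len(FILES[name].splitlines()) - 1  # minus the header line


def summarize(counts):
    """Downstream step: reduce the mapped results to one value."""
    return sum(counts)


mapped = [count_rows(name) for name in list_files()]  # one call per discovered file
total = summarize(mapped)
print(mapped, total)  # [2, 1] 3
```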

Event-Driven Workflows

The agility of Apache Airflow is further amplified through its support for event-driven workflows, enabling pipelines to respond to external events. This reactivity is made possible by sensors, both built-in and custom, which check repeatedly for a specific condition and let downstream tasks start only once it is met.

Such an approach suits scenarios where processing should begin as soon as an event is observed, such as a file arriving in storage, rather than at a fixed time. Because sensors poll at an interval, the response is prompt but not instantaneous.
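
The polling loop at the core of a sensor can be sketched as follows. This is a standard-library illustration, not Airflow's sensor class: the function name `wait_for` and the fake condition are hypothetical, while the parameter names `poke_interval` and `timeout` are chosen to echo the settings Airflow sensors expose.

```python
import time


def wait_for(condition, poke_interval, timeout):
    """Call condition() until it returns True or timeout seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poke_interval)


attempts = []


def file_has_arrived():
    # Hypothetical condition: pretend the file shows up on the third check.
    attempts.append(1)
    return len(attempts) >= 3


arrived = wait_for(file_has_arrived, poke_interval=0.01, timeout=1.0)
print(arrived, len(attempts))  # True 3
```

A shorter `poke_interval` reacts faster but checks the external system more often, which is the main trade-off when tuning a sensor.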

REST API and Custom Plugins

The extensibility of Airflow is one of its most compelling attributes, with the platform’s REST API and custom plugins opening doors to endless possibilities. Users can leverage the REST API for programmatic control over DAGs, integrating Airflow seamlessly into their existing systems and automating workflows with ease.

Additionally, the ability to craft custom plugins means that any unique requirements or integrations can be catered to, ensuring that Airflow remains at the forefront of workflow orchestration technology.
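
As an illustration of programmatic control, the sketch below builds (but does not send) a request that would trigger a DAG run. It assumes the `/api/v1/dags/{dag_id}/dagRuns` endpoint of the Airflow 2 stable REST API with basic authentication enabled; the host, DAG id, credentials, and `conf` payload are hypothetical, and newer Airflow releases may use a different API version and authentication scheme, so check the documentation for the release you run.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, username, password, conf):
    """Build a POST request that asks Airflow to start a new run of dag_id."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    body = json.dumps({"conf": conf}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


request = build_trigger_request(
    "http://localhost:8080",  # hypothetical local Airflow webserver
    "example_etl",            # hypothetical DAG id
    "admin",
    "admin",
    {"run_date": "2024-01-01"},
)
print(request.get_method(), request.full_url)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```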

Challenges and Limitations of Apache Airflow

Despite its many strengths, Apache Airflow has some challenges and limitations, particularly when it comes to handling streaming data and real-time analytics solutions. These limitations include:

Lack of built-in support for streaming data processing

Limited support for real-time scheduling and monitoring

Difficulty in scaling horizontally for high-volume data processing

Recognizing these limitations is crucial for data engineers when evaluating the suitability of Airflow for their specific needs and workflows.

Let’s explore some of the challenges that users may encounter when working with Apache Airflow.

Handling Streaming Data

Apache Airflow’s design is inherently optimized for batch processing, which presents challenges when attempting to manage streaming data. Airflow schedules discrete runs, mostly on a time basis, and even its sensors poll for events rather than consume a continuous stream, so it falls short in environments that demand real-time analytics or continuous data streams. For these scenarios, alternative tools such as Apache Kafka or Apache Flink are better suited to address the needs of streaming data solutions.

Usability for Non-Developers

Airflow’s steep learning curve can pose a barrier for non-developers or those without extensive Python knowledge. The platform’s reliance on programming to author and manage workflows means that users must be comfortable with code, which can be a significant challenge for individuals who are more accustomed to graphical interfaces or SQL-based tools.

This complexity often necessitates a higher level of technical expertise, potentially limiting its accessibility to a wider audience.

Pipeline Versioning Issues

One of the more nuanced challenges with Apache Airflow has long been its lack of built-in support for pipeline versioning; native DAG versioning arrived only with Airflow 3. Without native mechanisms to track changes or roll back to previous versions of workflows, users must rely on external version control systems to manage their pipelines. This can complicate the process of diagnosing and troubleshooting issues that arise from changes in the source data or pipeline configurations.

Implementing an effective versioning strategy is essential for maintaining control over the evolution of data pipelines and ensuring their reliability over time.

Summary

In sum, Apache Airflow emerges as a formidable ally in the quest for streamlined data workflow orchestration. Its rich feature set, robust architecture, and integrative capabilities make it an indispensable tool for data engineers and scientists seeking to author, schedule, and monitor complex data pipelines. While mindful of its limitations, particularly in streaming data and user accessibility, Airflow’s contributions to the field are undeniable. It is this blend of power and flexibility that positions Airflow as a cornerstone of successful data engineering practices, inspiring data professionals to continually push the boundaries of what’s possible with their data workflows.

Savvbi can use Airflow as part of data pipelines and data integrations.

Frequently Asked Questions

Is Airflow similar to Jenkins?

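No. Jenkins is an automation server built mainly for continuous integration and delivery of software, while Airflow is built for orchestrating data workflows with task dependencies and scheduling. The two also differ in integration capabilities, scalability, monitoring, community/ecosystem, and ease of use, so it’s important to choose the right tool based on specific needs and requirements.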

What is Apache Airflow primarily used for?

Apache Airflow is primarily used for orchestrating complex workflows, particularly ETL processes, machine learning model training, and batch processing jobs, offering precision and efficiency for data engineers.

Can Apache Airflow handle real-time data streaming?

No, Apache Airflow is designed for managing batch processing tasks, not for handling real-time data streaming. For real-time data streaming, tools like Apache Kafka or Apache Flink are more suitable.

How does Apache Airflow integrate with cloud services?

Apache Airflow integrates with cloud services like AWS, Google Cloud, and Azure through hooks and operators, facilitating seamless workflows across cloud ecosystems. This extensive support enables integration with services such as cloud-based data lakes, storage, and analytics, making it easier to manage tasks and processes efficiently.

Is Apache Airflow suitable for non-developers?

Apache Airflow may not be suitable for non-developers due to its steep learning curve and the requirement for programming knowledge, particularly in Python, to effectively manage workflows. Consider alternative tools that are more user-friendly for non-developers.